The CRO arena has been moving and shaking since its beginnings, constantly challenging itself to adapt and optimize (Convenient, right? Optimizers optimizing their own industry?). Lately, there has been a lot of buzz surrounding testing; specifically, how big should our tests be?

Some clients want to move hard and fast, testing radical changes very frequently, while other clients want to move more slowly at a safer testing pace: big vs. small.

While there may be steadfast rules surrounding testing, whether you should “go big or go home” should be based on a myriad of factors. Today, I’ll elaborate on a few of these critical factors so that you can make a more informed decision and keep on testing!

What is the problem you’re trying to solve?

As with all testing, I would advise not to go into a test wondering what kind of “big” test you can run for the cycle. Determine what problem or friction your users are facing and then design a solution to fit their needs.

If they have a radical problem, they may need a big solution.

Example: If you see significant drop-off throughout your entire checkout process, it may be wise to test a new checkout flow and process altogether.

However, if they only have a small problem, they may only need a small solution.

Example: If you notice that the bulk of users are clicking on an element with no corresponding action, perhaps you should give that element an action that matches what users expect from it.

How much traffic are you working with?

High traffic volume gives you a large testing sample size, which means you can get more accurate results regardless of whether you’re testing big or small. With high traffic volume, you can also detect small impacts more reliably.

Low traffic volume has a higher chance of delivering inconclusive test results because the sample does not have the statistical power to detect subtle variations in behavior. This, in turn, means that you may have to consistently run big tests to produce clear results and clear key takeaways. This is a risk in and of itself because users may only need a small change to optimize their experience, and they may respond quite negatively to a big change.
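To put rough numbers on the traffic question, here is a minimal sketch of a sample size estimate for a two-variant test. It assumes a standard normal-approximation formula for comparing two conversion rates, a two-sided 5% significance level, and 80% power. The function name, the 5% baseline conversion rate, and the lift values are hypothetical and only there for illustration; your testing tool may use a different method.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant to detect a relative lift.

    Assumes a two-sided test on two proportions (normal approximation).
    All inputs are illustrative assumptions, not recommendations.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical 5% baseline conversion rate
small_change = sample_size_per_variant(0.05, 0.10)  # subtle 10% relative lift
big_change = sample_size_per_variant(0.05, 0.50)    # dramatic 50% relative lift
print(f"Small change: ~{small_change} visitors per variant")
print(f"Big change:   ~{big_change} visitors per variant")
```

Under these assumptions, the subtle change needs roughly twenty times as many visitors per variant as the dramatic one, which is why low-traffic sites so often end up leaning on big tests.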

How frequently are you testing?

How much this question should shape your testing depends greatly on the nature of your users. It also depends greatly on your traffic volume.

Essentially, what are the odds that any given user could see multiple tests? If a user can see more than one test in a short amount of time, it would be best to mix small with big tests to prevent confusion and misalignment of expectations.

Example: If a significant share of your traffic is returning users, frequently running big tests may disorient these users or teach them that you’re constantly changing the site and thus constantly changing yourself. Humans are not fond of inconsistency and breaks in pattern, so I would not advise pursuing this route.
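If you want a back-of-the-envelope answer to "what are the odds that any given user could see multiple tests?", the sketch below may help. It assumes a returning user who visits during every test, and that each test independently includes the same share of traffic; both are simplifications, and the function name and numbers are hypothetical.

```python
def prob_sees_multiple_tests(num_tests, exposure_rate):
    """Chance that one returning user lands in two or more tests.

    Assumes each test independently includes a visitor with probability
    `exposure_rate` (a simplifying assumption for illustration).
    """
    p_none = (1 - exposure_rate) ** num_tests
    p_exactly_one = num_tests * exposure_rate * (1 - exposure_rate) ** (num_tests - 1)
    return 1 - p_none - p_exactly_one


# Hypothetical: 4 tests in a quarter, each shown to half of all traffic
print(f"{prob_sees_multiple_tests(4, 0.5):.0%} of returning users see 2+ tests")
```

The higher that percentage, the stronger the case for mixing small tests in with the big ones.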

How much time can you dedicate to your testing?

Big tests are quite the time commitment. Big test set-up tends to be much more technical and granular; perhaps even more code-heavy. The QA process should also take much more time, as a big test can more drastically affect the user experience and the entire domino chain that follows each user’s entrance into the site. Launching the test and monitoring it early on will also occupy more of your time, so that you can ensure this big test is not a short-sighted, big disaster.

Small tests, on the other hand, typically require a short set-up time and a rather simple change that does not need as deep a QA session to catch any issues.

Final Thoughts

- Test in order to solve an overarching problem for your users rather than trying to test “big” or “small”

- A big test can end inconclusively just as easily as a small test can produce a big impact

I’ll leave you with examples of that second point in the flesh:

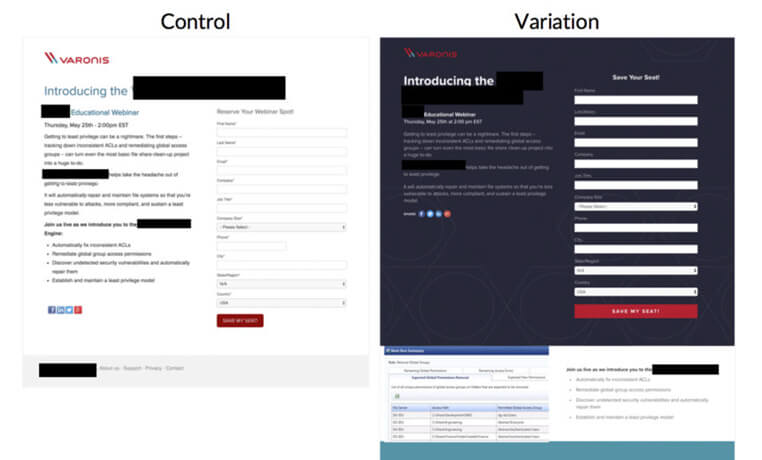

Big Test – Small Impact Example: (above) We tested a completely redesigned webinar landing page and our results were inconclusive. Granted, a lot of factors led to the inconclusive result, but it showed that even a page redesign can sometimes leave users indifferent and fail to create a noticeable impact.

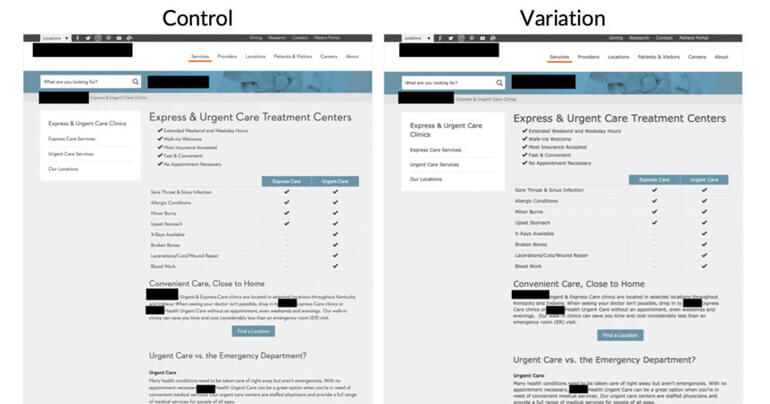

Small Test – Big Impact Example: (above) We impression tested a new font and saw a 75% preference for the Variation. While this was good enough, we chose to push on and test retention as well with a 5-second test. Overall, the number of users who correctly retained highly visible information increased by 216.67%.