Over the past few years, a major theme of Google’s updates to its ad platform has been to encourage more automated management. The release of Responsive Search Ads (RSAs) fits well within this trend, as the ad type dynamically combines ad assets to more closely match a user’s search term. But what effect do they have in practice? Do they actually improve click-through-rates (as we would expect more relevant ads to do)? And, if they truly match with a greater range of search queries, do they get entered in more auctions than their non-RSA equivalents? In order to test these hypotheses, we created 50/50 split experiments in the account of a client that sells industrial oils and lubricants.

A Quick Primer On Responsive Search Ads

Prior to discussing the experiment itself, a quick introduction to RSAs may benefit some readers. In contrast to Expanded Text Ads (ETAs), which display headlines and description lines in a static, predetermined order, RSAs are created by providing a variety of headlines and description lines and letting Google Ads determine the most effective combinations. Each RSA allows for a maximum of 15 headlines and 4 description lines. Headlines and description lines can be pinned to a position to ensure ads are coherent and assets appear in a sensible order. In each auction, Google Ads will determine the best combination of assets based on historical data, the user’s search query, and device type.

Below is an example of the assets for one responsive search ad that was created for the experiment described in this post:

- Headlines
  - Shop Mobiltherm 603 (pinned to the first position)
  - Superior Customer Service
  - Your Online Lubricant Partner
  - Flexible Shipping Options

- Description Lines
  - The Best Place To Shop Mobiltherm 603. Use Your Own Shipping Or Ship With Us.
  - Offering Unsurpassed Expertise, Technical Advice & Support. Get Started Now.
  - Unrivaled Service, With Hundreds Of 5-Star Reviews. Shop With Confidence.
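To get a feel for how many variations Google Ads has to choose between, the rough count below may help. It is only a sketch: it assumes every served ad shows exactly three headlines and two description lines in a meaningful order (in practice fewer may be shown), and that each pinned headline occupies its own position. The function name `rsa_combinations` is made up for this illustration.

```python
from math import perm


def rsa_combinations(headlines, descriptions, pinned_headlines=0,
                     shown_headlines=3, shown_descriptions=2):
    """Count ordered asset combinations for one RSA.

    Assumption (not from Google): every impression shows exactly
    `shown_headlines` headlines and `shown_descriptions` descriptions,
    and each pinned headline is fixed to its own distinct position.
    """
    # Pinned headlines fill their slots; the rest compete for what is left.
    free_headlines = headlines - pinned_headlines
    free_slots = shown_headlines - pinned_headlines
    return perm(free_headlines, free_slots) * perm(descriptions, shown_descriptions)


# The example ad above: 4 headlines (1 pinned) and 3 description lines.
example_ad = rsa_combinations(4, 3, pinned_headlines=1)

# An RSA that uses every available slot: 15 headlines, 4 description lines.
full_ad = rsa_combinations(15, 4)

print(example_ad, full_ad)
```

Under these assumptions the example ad has only a few dozen possible arrangements, while a fully loaded RSA has tens of thousands, which is worth keeping in mind when thinking about how much data the system needs to learn from.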

The Experiment Set-Up And KPIs

In order to evaluate the effect of RSA inclusion in ad groups, we created experiments for 8 campaigns in the account. For each campaign, RSAs were created for each active ad group to rotate with the existing Expanded Text Ads (of which each ad group had at least 3). Ads were set to optimize for best performance rather than rotate evenly. The experiments ran for four weeks and saw nearly 30,000 impressions over that period.

Because this particular account is not limited by budget and the client’s goals are growth-oriented, the main KPI used to determine whether or not the RSAs’ inclusion in ad groups was beneficial is overall click volume. Hypothetically, by being more relevant to users’ search queries, RSAs should show in more auctions and see a stronger click-through-rate, leading to more traffic. Below we’ll examine whether or not that hypothesis was proven true.

The Results

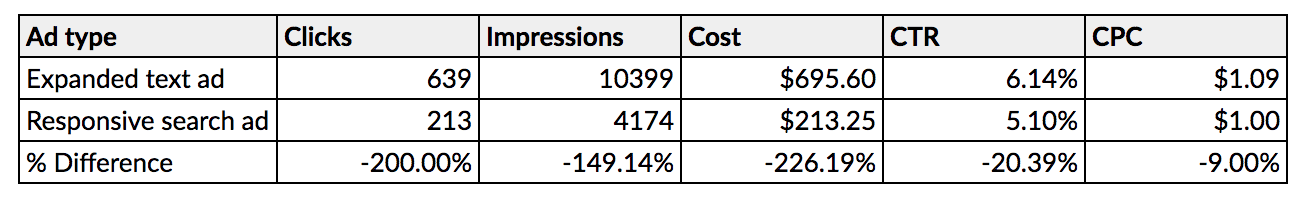

Let’s begin by taking a look at the performance of the RSAs themselves. The results in the table below are exclusive to the experimental campaigns that showed both the ETAs and the RSAs.

The most interesting result of this initial comparison is the differential in click-through-rate, which shows that the click-through-rate of the RSAs was more than 20% lower than that of the ETAs. While interesting, and evidence that the RSAs were not more relevant than our ETAs, I don’t think that this comparison is conclusive in that regard. There’s a lot of noise in this particular comparison, as the RSAs were shown much less frequently than the ETAs. Rather than comparing the performance of the ad types themselves, we should get more relevant data by comparing the campaigns that included/did not include RSAs. In other words, even if the RSAs performed worse than their ETA peers, it’s possible that their inclusion in ad groups nonetheless had a positive effect on performance overall. That comparison is below:

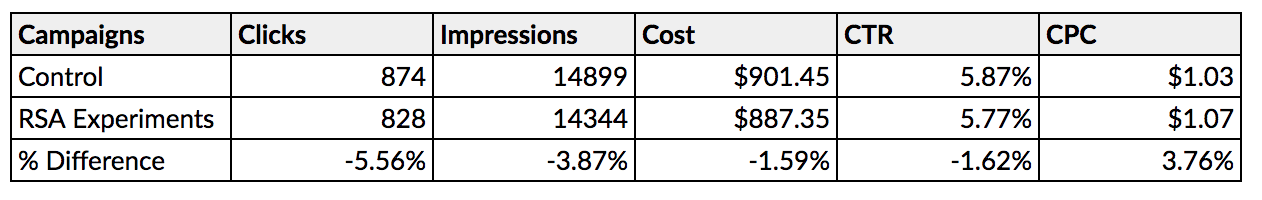

Unfortunately, in this case, the RSAs’ inclusion did not have a positive effect on aggregate performance. The results show moderate decreases in clicks, impressions, and click-through-rate from the experimental campaigns that included RSAs. While these results may not be statistically significant, they certainly don’t make a good case for applying the experiments moving forward. What might explain this poor performance? A few possibilities:

- The major “value-add” of RSAs is the promise that ads will more closely match users’ search queries. In this case, I suspect that this value is blunted by the degree to which we had already segmented keywords and ad text. In the campaigns experimented upon, each ad group targets a fairly narrow set of keywords with ad copy that was intentionally written to align with those keywords. Furthermore, none of these campaigns include any keywords of the broad match type, so a large proportion of matched search queries should have seen ads that included search terms in ad copy regardless of whether they were shown an ETA or RSA.

- Perhaps the quality of the RSAs was lower than that of the ETAs. Those extra headlines and description lines that were included may just have not been as compelling as what was present in the ETAs. I don’t believe this was the case since the RSA copy was in most cases identical to copy that was already present in the ETAs, but it nonetheless should be mentioned as a possibility.

- Google’s page on RSAs includes the following statement: “*Over time*, Google Ads will test the most promising ad combinations, and learn which combinations are the most relevant for different queries” (italics added for emphasis). Perhaps, then, the RSAs simply did not have enough time to gather the data necessary to improve performance. The comparison below shows just the last two weeks of experimental data, and it does appear that the experimental campaigns that included RSAs may have improved over that timeframe compared to the entire performance window shown above.

Conclusions

The experiment described above details results for just one account, and there are a variety of variables that may affect performance in the accounts that you manage. Therefore, I encourage you to set up your own experiments rather than assuming that RSAs will or will not improve performance. When setting up the experiments and evaluating results, though, I strongly encourage you to evaluate performance based on the effect of the RSAs’ inclusion in ad groups, rather than the performance of the RSAs themselves. For most accounts, whether or not RSAs outperform your status quo ads is a less important question than whether or not including RSAs improves performance overall.
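When you evaluate your own experiment, it is also worth checking whether a difference in click-through-rate between the control and experiment arms is larger than chance alone would explain. One common way to do that is a two-proportion z-test, sketched below. This is not necessarily how Google Ads reports significance, the function name `ctr_z_test` is made up for this illustration, and the click and impression figures are hypothetical placeholders rather than results from the experiment in this post.

```python
from math import erfc, sqrt


def ctr_z_test(clicks_a, impressions_a, clicks_b, impressions_b):
    """Two-proportion z-test comparing the CTR of arm B against arm A.

    Returns (z, p_value), where p_value is two-sided. Assumes each
    impression is an independent trial, which is only an approximation.
    """
    ctr_a = clicks_a / impressions_a
    ctr_b = clicks_b / impressions_b
    # Pooled CTR under the null hypothesis that both arms share one rate.
    pooled = (clicks_a + clicks_b) / (impressions_a + impressions_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / impressions_a + 1 / impressions_b))
    z = (ctr_b - ctr_a) / std_error
    # Two-sided p-value from the standard normal distribution.
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


# Hypothetical numbers for illustration only (not this experiment's data).
control_clicks, control_impressions = 600, 15000        # ETAs only
experiment_clicks, experiment_impressions = 540, 14500  # ETAs plus RSAs

z, p = ctr_z_test(control_clicks, control_impressions,
                  experiment_clicks, experiment_impressions)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A p-value below a threshold you choose in advance (0.05 is a common choice) suggests the gap in click-through-rate is unlikely to be noise. A larger one means the experiment probably needs more time or more traffic before you draw a conclusion.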

For further discussion of Responsive Search Ads, check out the webinar on the subject from Hanapin’s Matt Umbro and Directive Consulting’s Garrett Mehrguth. For a more detailed description of what RSAs are and how they operate, check out our blog post summarizing their release. And, if you have questions or want to share your own experience with this new ad type, reach out to @ppchero on Twitter.