Back in the olden days, ad copy testing consisted of adding exclamation marks or using proper case. That was also when you only had one headline. Times have changed, and so has the sophistication of ad testing. I’ve compiled a list of things to consider for your next ad test.

Draft a Google Experiment or run the test in the current ad group?

After talking to several people and clients, I’ve concluded that it depends on your preference. Experiments are a good way to allocate a portion of your budget to your test and to decide whether users see only your test ad, only your original ad, or a random mix. And if you prefer uncluttered ad groups, your experiments are housed in their own experiment campaign. So why would you want to run a test without experiments? Perhaps you’d like to see how Google’s machine learning treats your ads.

How do you decide where and what to test?

The obvious place to start testing is where performance is poor, but which metrics should you compare? CTR and conversion rate should always be among them; after all, they show how users respond to your copy and whether they convert. The primary metric depends on your goal: it could be conversions, cost per conversion, or ROI.
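If you export raw totals per ad, the metrics above are simple ratios. The sketch below is a minimal illustration, assuming you have impressions, clicks, conversions, cost, and conversion revenue for each ad; the `AdStats` class, the `best_by` helper, and all numbers are hypothetical and are not taken from the tables in this post. Conversion rate is calculated here as conversions per click.

```python
from dataclasses import dataclass


@dataclass
class AdStats:
    """Raw totals for one ad over the test window (hypothetical field names)."""
    name: str
    impressions: int
    clicks: int
    conversions: int
    cost: float
    revenue: float

    @property
    def ctr(self) -> float:
        # Click-through rate: share of impressions that produced a click.
        return self.clicks / self.impressions if self.impressions else 0.0

    @property
    def conversion_rate(self) -> float:
        # Conversions per click.
        return self.conversions / self.clicks if self.clicks else 0.0

    @property
    def cost_per_conversion(self) -> float:
        # Average cost of one conversion; infinite if nothing converted.
        return self.cost / self.conversions if self.conversions else float("inf")

    @property
    def roas(self) -> float:
        # Return on ad spend: revenue earned per unit of cost.
        return self.revenue / self.cost if self.cost else 0.0


def best_by(ads, metric, higher_is_better=True):
    """Return the ad that wins on a single metric (hypothetical helper)."""
    key = lambda ad: getattr(ad, metric)
    return max(ads, key=key) if higher_is_better else min(ads, key=key)


# Hypothetical numbers: ad A wins on ROAS and CTR, ad B on conversion rate and cost.
ads = [
    AdStats("ad A", impressions=10_000, clicks=500, conversions=25, cost=750.0, revenue=3_000.0),
    AdStats("ad B", impressions=10_000, clicks=400, conversions=32, cost=560.0, revenue=2_000.0),
]

for metric, higher in [("roas", True), ("ctr", True),
                       ("conversion_rate", True), ("cost_per_conversion", False)]:
    print(f"{metric}: {best_by(ads, metric, higher).name}")
```

Looking at each metric separately like this makes it obvious when different ads win on different metrics, which is exactly the situation where your goal has to decide the winner.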

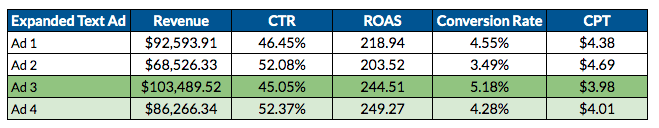

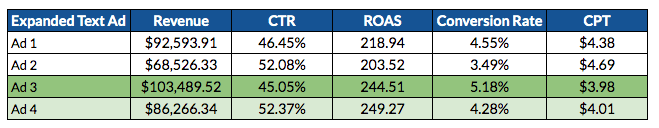

Using ROAS as our goal, we can see in the table below that ad 4 has the highest ROAS and the highest CTR. Great: we’re making money AND the copy resonates with users. However, if we also consider conversion rate and cost per transaction (CPT), ad 3 is the clear winner. We’re still making money, the copy converts at a higher rate, AND we’re saving money with a lower CPT.

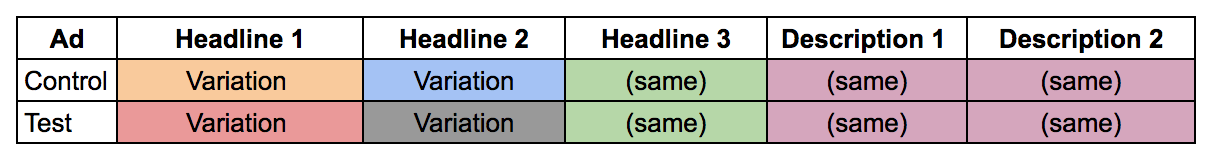

Great, now that you have your winning ad, make it your control. Next, decide whether to conduct your test in the headlines or the description lines. If you test in the headlines, make sure the change fits within headlines 1 and 2; headline 3 is known to show less often, which can skew your results. So, what do you test? Add a unique value proposition, convey brand authority, or try dynamic keyword insertion (DKI). There are many possibilities, but hold on: you don’t want to add too many variables to a single test. Otherwise, you won’t know whether it was the personalization of DKI or your unique value proposition that worked.

OK, so how do I know when to end my test and declare a winner?

Best practice is to run your ad for a minimum of two weeks, but will that be enough? That depends on how much traffic your ads have received and whether the results have reached statistical significance. Other factors to take into consideration include significant budget changes, previous campaign status, seasonality, and promotions.
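To get a rough sense of whether two weeks is enough for your traffic, you can estimate the sample size up front. The sketch below uses the standard normal-approximation formula for comparing two proportions; the function names, the default 95% confidence level and 80% power, and the scenario numbers are assumptions for illustration, not settings used by Google Ads. "Observations" means clicks when testing conversion rate, or impressions when testing CTR.

```python
import math
from statistics import NormalDist


def sample_size_per_ad(baseline_rate: float, expected_rate: float,
                       alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate observations needed per ad to detect baseline_rate -> expected_rate.

    Normal-approximation formula for a two-sided, two-proportion test
    (hypothetical helper).
    """
    if baseline_rate == expected_rate:
        raise ValueError("expected_rate must differ from baseline_rate")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = baseline_rate * (1 - baseline_rate) + expected_rate * (1 - expected_rate)
    n = (z_alpha + z_beta) ** 2 * variance / (expected_rate - baseline_rate) ** 2
    return math.ceil(n)


def days_needed(baseline_rate: float, expected_rate: float,
                daily_observations_per_ad: float, min_days: int = 14, **kwargs) -> int:
    """Days to reach the sample size, never less than the two-week floor."""
    n = sample_size_per_ad(baseline_rate, expected_rate, **kwargs)
    return max(min_days, math.ceil(n / daily_observations_per_ad))


# Hypothetical scenario: the control converts at 5%, we hope the new copy
# reaches 6%, and each ad gets about 300 clicks per day.
print(sample_size_per_ad(0.05, 0.06))
print(days_needed(0.05, 0.06, daily_observations_per_ad=300))
```

The smaller the lift you want to detect, the more traffic you need, so low-volume ad groups can easily need far longer than two weeks. Budget changes, seasonality, or promotions during that window can still distort the result.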

If you used Google Experiments, it will tell you at the top whether you’ve reached statistical significance. Alternatively, you can use AB Testguide or any other significance calculator; just be sure to stick to the same one throughout your test. If you found a winner at the end of your test, great, move on to another test. No winner? Great, move on to another test. Either way, your results still point you to your next step, so keep on testing.
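For a sense of what a significance calculator does under the hood, here is a minimal sketch of one common approach: a two-sided, two-proportion z-test on CTR or conversion rate. It is not necessarily the method Google Experiments or AB Testguide uses; calculators can differ in test type and confidence level, which is one reason to stick to a single one. The function name and the example counts are hypothetical.

```python
import math
from statistics import NormalDist
from typing import Tuple


def two_proportion_z_test(successes_a: int, trials_a: int,
                          successes_b: int, trials_b: int) -> Tuple[float, float]:
    """Two-sided z-test for a difference between two rates (hypothetical helper).

    A is the control ad, B the test ad. Successes/trials are clicks/impressions
    for CTR, or conversions/clicks for conversion rate. Returns (z, p_value).
    """
    p_a = successes_a / trials_a
    p_b = successes_b / trials_b
    # Pooled rate under the null hypothesis that both ads perform the same.
    pooled = (successes_a + successes_b) / (trials_a + trials_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / trials_a + 1 / trials_b))
    if se == 0:
        # Both ads at 0% or both at 100%: no observable difference.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: control 200 conversions from 4,000 clicks (5%),
# test ad 260 conversions from 4,000 clicks (6.5%).
z, p = two_proportion_z_test(200, 4000, 260, 4000)
alpha = 0.05  # corresponds to a 95% confidence level
print(f"z = {z:.2f}, p = {p:.4f}, significant: {p < alpha}")
```

A p-value below your chosen threshold suggests the difference is unlikely to be chance; a p-value above it is the "no winner" case, which still tells you something about your next test.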