Even the newest face in the digital marketing world won't be surprised by the amount of attention mobile has garnered in the past few years. Ours is a rapidly evolving industry: what was once an unexplored frontier has quickly become a staple. That's not to say mobile advancements have become less frequent or noteworthy over time. On the contrary, the shift toward a mobile-centric world has sparked even greater curiosity about the topic.

To name just a small sliver of mobile-focused updates from the last several months alone, we've seen the introduction of device-specific bid adjustments in search, Promoted Places for Google Maps (inspired by mobile search tendencies), and Bing's mobile app install ads pilot. Trends indicate 2017 may even be the year mobile overtakes desktop in industry-wide ad spend, as this post from last week suggests, with the "mobile-first" attitude becoming more prevalent each year.

This leaves marketers with a question: how should we adapt to meet this demand and achieve the best possible results from it? The updates mentioned give us more tools and more robust techniques for meeting this challenge, but it can be difficult to know what's right for the clients or accounts we work with. In this discussion, we'll focus on device-specific bid adjustments as a strategy for accommodating differences in desktop and mobile behavior, analyzing whether they are effective compared with splitting mobile traffic into its own campaigns for more precise control.

Device Bidding And Mobile Campaigns

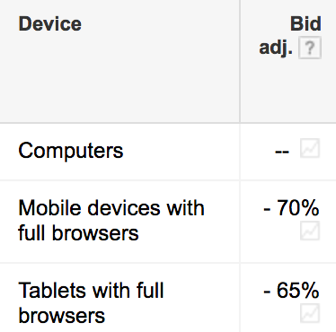

For the uninitiated, a device bid modifier raises or lowers your bid by a percentage based on the type of device a searcher uses. For example, a -60% adjustment on mobile would decrease a $2.00 bid to $0.80 for mobile users, whereas a +20% modifier on desktop would raise that bid to $2.40.
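To make the arithmetic concrete, here is a minimal sketch of a single device modifier applied to a base bid. The function name `adjusted_bid` is hypothetical, and the sketch assumes one adjustment applied as `base × (1 + modifier/100)`; it does not model how an ad platform combines several adjustments at once or any platform-specific limits on the modifier range.

```python
def adjusted_bid(base_bid: float, modifier_pct: float) -> float:
    """Apply one device bid modifier given as a percentage (e.g. -60 or +20).

    Hypothetical helper: assumes a single adjustment and ignores
    platform-specific modifier limits.
    """
    if modifier_pct < -100:
        # Anything below -100% would imply a negative bid.
        raise ValueError("modifier cannot be below -100%")
    # Round to cents, since bids are expressed in currency units.
    return round(base_bid * (1 + modifier_pct / 100), 2)


print(adjusted_bid(2.00, -60))  # mobile example from the text
print(adjusted_bid(2.00, 20))   # desktop example from the text
```

Note that a +120% modifier would more than double the bid (to $4.40), so a +20% modifier is what raises a $2.00 bid to $2.40.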

Given that mobile users tend to search, interact with websites, convert, and ultimately provide value differently than those on desktop, bid adjustments are useful for ensuring you obtain the most qualified traffic for meeting your goals.

However, mobile’s growing presence has created new demands and questions that can be tricky to answer with bid modifiers alone. For instance:

- Should mobile be given its own budget?

- Does mobile require specific CPCs to remain a worthwhile investment?

- Is mobile behavior different enough that it would benefit from a unique strategy?

In these cases, mobile-exclusive campaigns may offer a more elegant solution, since the control a separate campaign provides makes such problems easier to address. We faced these issues in some accounts here at Hanapin, so we decided to test this tactic. Below are the results of two of our ongoing tests with mobile-only campaigns, along with an investigation into the story emerging from the data.

Case Study #1

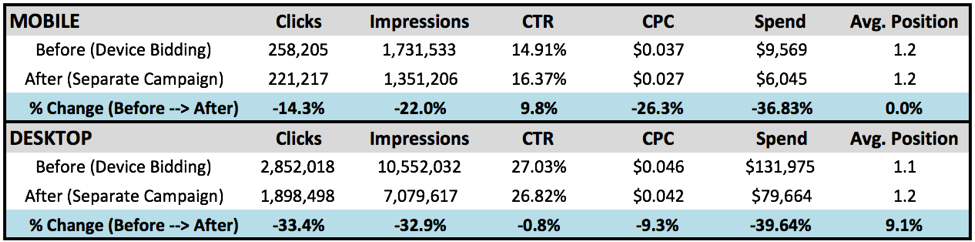

The question that inspired us to consider mobile-specific campaigns for this account was how to meet different budget and CPC goals for desktop and mobile devices. We focused on a single top-performing, non-branded campaign containing only a few keywords, choosing it for the impact it would have while avoiding the complexities of a more elaborate campaign structure. Before splitting this campaign into mobile- and desktop-specific versions, we had implemented device bid adjustments to handle CPC differences, and the data from both approaches are included for comparison ("Before" and "After" in the included table). The following results cover approximately two months of testing, with the original campaign data pulled from an equivalent timeframe before the switch.

Note that there are no conversion metrics to compare in this instance. Setting aside the overall decline in traffic caused by budget changes between the two timeframes, we can observe some modest performance changes that coincided with the split into mobile- and desktop-specific campaigns. Mobile CTR improved by just under 10% once mobile had its own campaign, while CPC dropped by a larger margin and average position held steady. We also examined desktop for any effects, although the differences there were slight: a small bump in position accompanied by minor reductions in CTR and CPC.

An important reminder in our data-driven work is that correlation does not imply causation. As such, the above evidence isn't enough to conclude that mobile-specific campaigns caused the performance changes we saw. This is especially true since the data comes from a single campaign in one account, where other factors may come into play. The key takeaway from this example is that a mobile-only campaign proved to be a viable solution to the original challenge of better managing mobile spend, offering a dedicated budget and more flexibility than a campaign targeting all devices. In that regard, the test was successful and could merit further investigation across the account.

Case Study #2

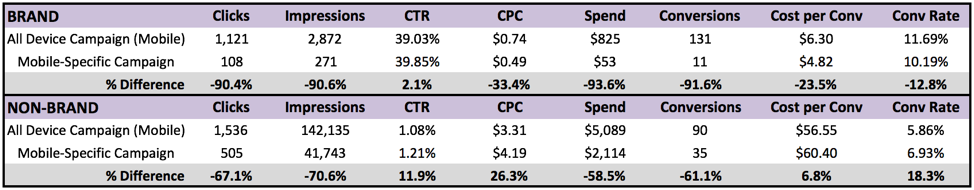

The second time we implemented mobile-specific campaigns was for another account with largely the same goal in mind: we wanted to improve the budgeting process for different devices beyond what bid modifiers allowed. In this scenario, the several campaigns with the highest mobile traffic were each split into a mobile-only variant, with a mixture of brand and non-brand campaigns receiving this treatment. This approach, along with the differences in industry, objective, and scale between the two accounts, adds further layers to our evaluation of mobile-specific campaigns. Here are the results we found within a single month:

Immediately, we can see that, unlike the account in the previous example, this one tracks traditional conversions. Note also that the differences in volume between the mobile-only campaigns and the standard campaigns were planned (to minimize the test's impact) rather than a consequence of splitting the campaigns. Even with these traffic differences, we can still observe how performance varied between brand and non-brand terms. For brand, cost-per-conversion and CPC dropped by roughly 23% and 33%, respectively, with a small decrease in conversion rate. The reverse trend emerged for non-brand, with higher cost-per-conversion, CPC, and conversion rate. In both cases, CTR improved by nominal amounts.
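Comparisons like these rely on ratio metrics and percentage changes, which let periods or campaigns with different traffic volumes be compared side by side (although smaller samples are noisier). The sketch below shows the standard definitions: CTR = clicks / impressions, CPC = cost / clicks, conversion rate = conversions / clicks, and cost-per-conversion = cost / conversions. The `PeriodStats` class and `pct_change` helper are hypothetical, and the numbers are made up for illustration; they are not data from either case study.

```python
from dataclasses import dataclass


@dataclass
class PeriodStats:
    """Hypothetical container for one campaign's totals over a period."""
    impressions: int
    clicks: int
    cost: float
    conversions: int

    @property
    def ctr(self) -> float:
        return self.clicks / self.impressions

    @property
    def cpc(self) -> float:
        return self.cost / self.clicks

    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.clicks

    @property
    def cost_per_conversion(self) -> float:
        return self.cost / self.conversions


def pct_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100


# Illustrative numbers only, not results from the tests described above.
before = PeriodStats(impressions=10_000, clicks=400, cost=600.0, conversions=20)
after = PeriodStats(impressions=8_000, clicks=352, cost=352.0, conversions=16)

for metric in ("ctr", "cpc", "conversion_rate", "cost_per_conversion"):
    b, a = getattr(before, metric), getattr(after, metric)
    print(f"{metric}: {b:.4f} -> {a:.4f} ({pct_change(b, a):+.1f}%)")
```

Because each metric is a ratio, the lower impression and click counts in the second period do not by themselves make its CTR or CPC look worse.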

What does this information tell us? Although it illuminates the disparity between brand and non-brand campaigns, what we uncovered is less conclusive overall, especially compared with the findings from the first example. As with that exploration, the goal of this test was not primarily to determine whether mobile-specific campaigns would outperform campaigns using device bid modifiers. Instead, we wanted to see whether these exclusive campaigns would offer greater control over mobile bids and budgets without significantly sacrificing conversion rates. Continued testing will help resolve the debate, but our discoveries so far are promising for meeting this need while maintaining performance.

Concluding Thoughts

We return to our original question: when choosing between campaigns with device bid modifiers and device-specific campaigns, which should we use for our mobile strategy? The data here offer some insights from our own forays into the matter and may help guide your approach. The key considerations are the themes common to both examples, as well as the challenges you may face in an increasingly mobile-centric world.

In our scenarios, we sought better ways to manage mobile spend, and mobile-specific campaigns proved useful for the tighter control over budgets and bids they provide. Another benefit of these campaigns is their ability to truly support a mobile-specific strategy through flexibility in keywords, ads, targeting, and other details. With greater control, however, comes more complex management, especially if you're considering mobile versions of many campaigns. Furthermore, the impact of mobile-specific campaigns on performance may vary by account and context, so testing to uncover unknowns is a good way to proceed before fully diving in.

It should be said that the two strategies discussed are not mutually exclusive. You may find that a mixture of campaigns with device bid modifiers and campaigns with mobile-only targeting works well for achieving your goals. Ultimately, the best approach involves discovering what suits your account or business and tailoring your strategy accordingly.